As you all know, mapping the environment with a camera-equipped UAV is a very common use for drones, and there are many tools available to stitch these images together into a high-resolution map. There are also many tools for creating 3D digital elevation models, which can be used for things like planning, measurement and GIS.

I found a great video on YouTube (thanks to gtoonstra) which shows the workflow for turning your 2D images into a 3D textured map.

Applications used for this automated process

- VisualSfM to construct a 3D model

- Model refined with CMPMVS for a more accurate mesh and point cloud

- Point cloud then put into CloudCompare to resample it (lower number of points)

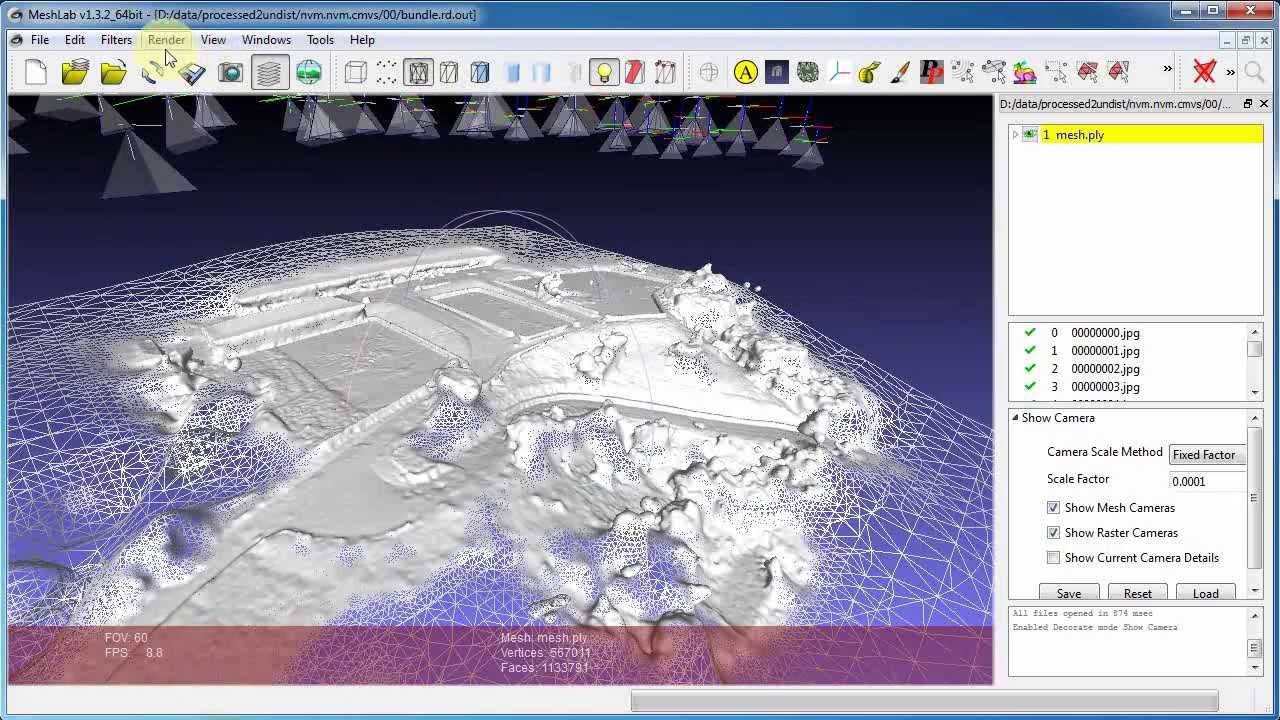

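- Resampled point cloud meshed with poissonrecon64.exe, then fed into MeshLab to clean it up

- VisualSfM also used to produce a bundler output (via pmvs2.exe) with the camera positions of the original images

- Texture generated in MeshLab by reprojecting the photos onto the mesh, then the textured OBJ model is imported into Blender to visualize the 3D terrain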
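The CloudCompare step is only about reducing the point count before meshing. As a rough illustration of that idea (this is not CloudCompare's own algorithm, just a generic voxel-grid downsampling swapped in for explanation), the Python sketch below assumes the cloud has already been loaded as a list of (x, y, z) tuples; `voxel_subsample` and `voxel_size` are hypothetical names.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def voxel_subsample(points: List[Point], voxel_size: float) -> List[Point]:
    """Downsample a point cloud by replacing all points that fall into the
    same cubic voxel (edge length voxel_size) with their centroid.
    Hypothetical helper for illustration, not CloudCompare's implementation."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    # voxel index -> [sum_x, sum_y, sum_z, count]
    cells: Dict[Tuple[int, int, int], list] = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for x, y, z in points:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        acc = cells[key]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
    # One output point per occupied voxel, in first-seen order
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in cells.values()]


if __name__ == "__main__":
    cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.5, 0.0, 0.0)]
    # The first two points share a voxel and collapse into their centroid
    print(voxel_subsample(cloud, voxel_size=1.0))
```

A larger `voxel_size` keeps fewer points; make it too large and fine terrain detail disappears before the mesh is ever built.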

This workflow uses VisualSfM to construct a 3D model, which is then refined by CMPMVS to get a more accurate mesh. The point cloud from CMPMVS is fed into CloudCompare, which resamples it to lower the point count (MeshLab should be able to do the same). The resampled point cloud is then processed with poissonrecon64.exe (using the screened Poisson method), which usually produces a very nice looking mesh. The resulting mesh is imported into MeshLab, where it can be cleaned up.

VisualSfM can also create a separate bundler output file using the standard pmvs2.exe application. This bundler output is only needed to get suitable camera positions for all the original images, which become “raster images” in MeshLab. The new mesh is parametrized using these raster cameras, and a texture is then generated by reprojecting the original photos onto the mesh. This creates the texture for the game engine model.

The model is then exported in OBJ format to preserve the texture UV coordinates. The final OBJ file is imported into Blender and visualized, with a couple of tweaks to the final textured 3D model.
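The texturing step relies on the camera positions from the bundler output. As a simplified sketch of the idea (not MeshLab's implementation, which works with many rasters at once), the Python below projects each mesh vertex through a single camera using the camera model documented for Bundler v0.3 files (P = R·X + t, p = −P / P.z, p′ = f·r(p)·p) and writes the resulting UVs into OBJ text. It assumes the camera parameters have already been read from the bundler file and that the photo is `width` × `height` pixels; `BundlerCamera`, `to_uv` and `textured_obj` are hypothetical names, and occlusion is ignored.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Matrix3 = Sequence[Sequence[float]]


class BundlerCamera:
    """One camera from a Bundler v0.3 file: focal length f, radial
    distortion k1/k2, 3x3 rotation R and translation t (hypothetical class)."""

    def __init__(self, f: float, k1: float, k2: float, R: Matrix3, t: Vec3) -> None:
        self.f, self.k1, self.k2, self.R, self.t = f, k1, k2, R, t

    def project(self, X: Vec3) -> Tuple[float, float]:
        """Project a world point to image coordinates with the origin at the
        image centre, x to the right and y up."""
        # World -> camera coordinates: P = R * X + t
        P = [sum(self.R[i][j] * X[j] for j in range(3)) + self.t[i] for i in range(3)]
        # Bundler cameras look down the negative z axis
        if P[2] >= 0:
            raise ValueError("point is not in front of the camera")
        # Perspective division: p = -P / P.z
        px, py = -P[0] / P[2], -P[1] / P[2]
        # Radial distortion: r(p) = 1 + k1*|p|^2 + k2*|p|^4
        r2 = px * px + py * py
        radial = 1.0 + self.k1 * r2 + self.k2 * r2 * r2
        return self.f * radial * px, self.f * radial * py


def to_uv(x: float, y: float, width: int, height: int) -> Tuple[float, float]:
    """Map centred image coordinates to [0, 1] texture coordinates (v up)."""
    return (x + width / 2) / width, (y + height / 2) / height


def textured_obj(vertices: List[Vec3], faces: List[Tuple[int, int, int]],
                 cam: BundlerCamera, width: int, height: int) -> str:
    """Build OBJ text with one UV per vertex, all taken from a single camera."""
    lines = ["v {:.6f} {:.6f} {:.6f}".format(*X) for X in vertices]
    for X in vertices:
        u, v = to_uv(*cam.project(X), width, height)
        lines.append(f"vt {u:.6f} {v:.6f}")
    for a, b, c in faces:
        # OBJ indices are 1-based; "v/vt" ties each face corner to its UV
        lines.append(f"f {a + 1}/{a + 1} {b + 1}/{b + 1} {c + 1}/{c + 1}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    cam = BundlerCamera(f=100.0, k1=0.0, k2=0.0,
                        R=[[1, 0, 0], [0, 1, 0], [0, 0, 1]], t=(0.0, 0.0, -10.0))
    print(textured_obj([(0, 0, 0), (1, 0, 0), (0, 1, 0)], [(0, 1, 2)], cam, 200, 100))
```

A real export would also reference a material (.mtl) file that points at the texture image, and with many overlapping photos you would need to choose, per face, a camera that actually sees it and pack the results into one texture, which is the kind of bookkeeping the MeshLab step takes care of.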